---

configs:
- config_name: DurLAR_20210716_S
  data_files:
  - split: image_01
    path:
    - "DurLAR_20210716_S/image_01/data/*.png"
  - split: image_02
    path:
    - "DurLAR_20210716_S/image_02/data/*.png"
  - split: ambient
    path:
    - "DurLAR_20210716_S/ambient/data/*.png"
  - split: reflectivity
    path:
    - "DurLAR_20210716_S/reflec/data/*.png"
- config_name: DurLAR_20210901_S
  data_files:
  - split: image_01
    path:
    - "DurLAR_20210901_S/image_01/data/*.png"
  - split: image_02
    path:
    - "DurLAR_20210901_S/image_02/data/*.png"
  - split: ambient
    path:
    - "DurLAR_20210901_S/ambient/data/*.png"
  - split: reflectivity
    path:
    - "DurLAR_20210901_S/reflec/data/*.png"
- config_name: DurLAR_20211012_S
  data_files:
  - split: image_01
    path:
    - "DurLAR_20211012_S/image_01/data/*.png"
  - split: image_02
    path:
    - "DurLAR_20211012_S/image_02/data/*.png"
  - split: ambient
    path:
    - "DurLAR_20211012_S/ambient/data/*.png"
  - split: reflectivity
    path:
    - "DurLAR_20211012_S/reflec/data/*.png"
- config_name: DurLAR_20211208_S
  data_files:
  - split: image_01
    path:
    - "DurLAR_20211208_S/image_01/data/*.png"
  - split: image_02
    path:
    - "DurLAR_20211208_S/image_02/data/*.png"
  - split: ambient
    path:
    - "DurLAR_20211208_S/ambient/data/*.png"
  - split: reflectivity
    path:
    - "DurLAR_20211208_S/reflec/data/*.png"
- config_name: DurLAR_20211209_S
  data_files:
  - split: image_01
    path:
    - "DurLAR_20211209_S/image_01/data/*.png"
  - split: image_02
    path:
    - "DurLAR_20211209_S/image_02/data/*.png"
  - split: ambient
    path:
    - "DurLAR_20211209_S/ambient/data/*.png"
  - split: reflectivity
    path:
    - "DurLAR_20211209_S/reflec/data/*.png"

license: cc-by-4.0

task_categories:

- depth-estimation

language:

- en

pretty_name: DurLAR Dataset - exemplar dataset (600 frames)

size_categories:

- n<1K

extra_gated_prompt: >-
  By clicking on “Access repository” below, you also agree to the DurLAR Terms of Access:

  [RESEARCHER_FULLNAME] (the “Researcher”) has requested permission to use the DurLAR dataset (the “Dataset”), collected by Durham University. In exchange for such permission, Researcher hereby agrees to the following terms and conditions:

  1. Researcher shall use the Dataset only for non-commercial research and educational purposes.

  2. Durham University and Hugging Face make no representations or warranties regarding the Dataset, including but not limited to warranties of non-infringement or fitness for a particular purpose.

  3. Researcher accepts full responsibility for their use of the Dataset and shall defend and indemnify Durham University and Hugging Face, including their employees, officers, and agents, against any and all claims arising from Researcher’s use of the Dataset.

  4. Researcher may provide research associates and colleagues with access to the Dataset only if they first agree to be bound by these terms and conditions.

  5. Durham University and Hugging Face reserve the right to terminate Researcher’s access to the Dataset at any time.

  6. If Researcher is employed by a for-profit, commercial entity, their employer shall also be bound by these terms and conditions, and Researcher hereby represents that they are fully authorized to enter into this agreement on behalf of such employer.

  7. Researcher agrees to cite the following paper in any work that uses the DurLAR dataset or any portion of it:

  DurLAR: A High-fidelity 128-channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-modal Autonomous Driving Applications

  (Li Li, Khalid N. Ismail, Hubert P. H. Shum, and Toby P. Breckon), In Int. Conf. 3D Vision, 2021.

  8. The laws of the United Kingdom shall apply to all disputes under this agreement.

---

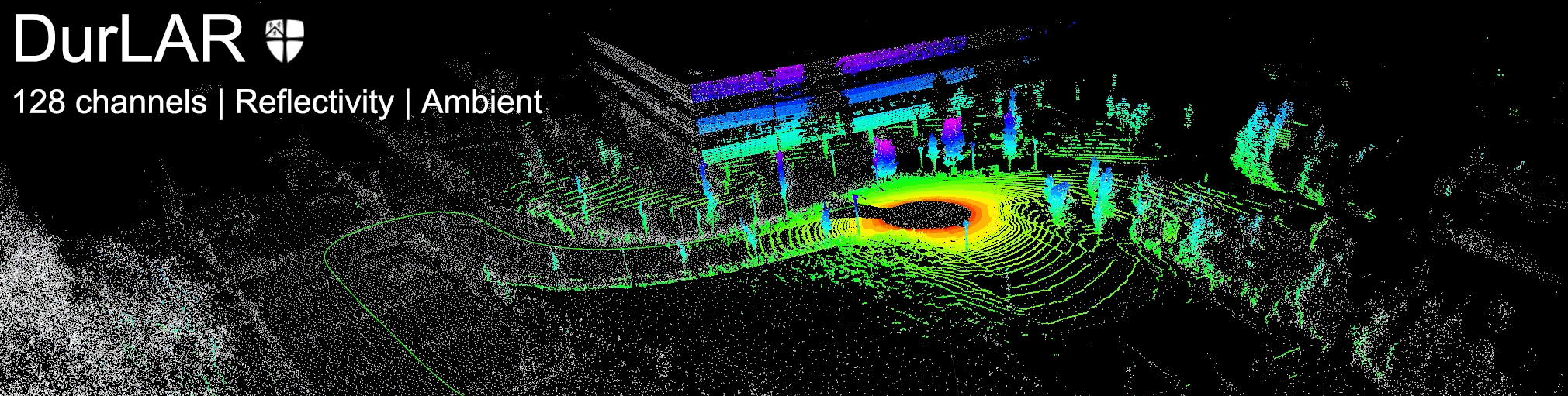

# DurLAR: A High-Fidelity 128-Channel LiDAR Dataset

## News

- [2024/12/05] We provide the **intrinsic parameters** of our OS1-128 LiDAR [[download]](https://github.com/l1997i/DurLAR/raw/refs/heads/main/os1-128.json).

## Sensor placement

- **LiDAR**: [Ouster OS1-128 LiDAR sensor](https://ouster.com/products/os1-lidar-sensor/) with 128-channel vertical resolution

- **Stereo Camera**: [Carnegie Robotics MultiSense S21 stereo camera](https://carnegierobotics.com/products/multisense-s21/) with grayscale, colour, and IR-enhanced imagers, 2048 × 1088 (2 MP) resolution

- **GNSS/INS**: [OxTS RT3000v3](https://www.oxts.com/products/rt3000-v3/) global navigation satellite and inertial navigation system, supporting localization from the GPS, GLONASS, BeiDou and Galileo constellations, as well as PPP and SBAS corrections

- **Lux Meter**: [Yocto Light V3](http://www.yoctopuce.com/EN/products/usb-environmental-sensors/yocto-light-v3), a USB ambient light sensor (lux meter), measuring ambient light up to 100,000 lux

## Panoramic Imagery

<br>
<p align="center">
<img src="https://github.com/l1997i/DurLAR/blob/main/reflect_center.gif?raw=true" width="100%"/>
</p>
<h5 id="title" align="center">Reflectivity imagery</h5>
<br>

<br>
<p align="center">
<img src="https://github.com/l1997i/DurLAR/blob/main/ambient_center.gif?raw=true" width="100%"/>
</p>
<h5 id="title" align="center">Ambient imagery</h5>
<br>

## File Description

Each drive folder (`DurLAR_<date>/`) of the DurLAR dataset contains the following 8 topics:

- `ambient/`: panoramic ambient imagery

- `reflec/`: panoramic reflectivity imagery

- `image_01/`: right grayscale camera (synced + rectified)

- `image_02/`: left RGB camera (synced + rectified)

- `ouster_points/`: Ouster LiDAR point clouds (KITTI-compatible binary format)

- `gps/`, `imu/`, `lux/`: GPS, IMU, and lux meter readings (CSV format)
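Since the `ouster_points/` scans are described as KITTI-compatible, a minimal loading sketch follows. It assumes the usual KITTI layout of headerless `float32` records with four values per point (`x, y, z, reflectance`); the per-point fields have not been checked against DurLAR itself, and the helper name `load_ouster_bin` is hypothetical.

```python
import os
import tempfile

import numpy as np


def load_ouster_bin(path):
    """Load a KITTI-style .bin scan as an (N, 4) float32 array.

    Assumed layout (KITTI convention): no header, one record of four
    float32 values (x, y, z, reflectance) per point.
    """
    scan = np.fromfile(path, dtype=np.float32)
    if scan.size % 4 != 0:
        raise ValueError(f"{path}: {scan.size} floats is not a multiple of 4")
    return scan.reshape(-1, 4)


# Round-trip self-check on a synthetic two-point scan (not real DurLAR data).
demo = np.array([[1.0, 2.0, 3.0, 0.5],
                 [4.0, 5.0, 6.0, 0.25]], dtype=np.float32)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.bin")
    demo.tofile(demo_path)
    points = load_ouster_bin(demo_path)

xyz = points[:, :3]          # Cartesian coordinates
reflectance = points[:, 3]   # fourth channel (assumed reflectance/intensity)
print(points.shape)
```

For a real scan, pass a path of the form `DurLAR_<date>/ouster_points/data/<frame_number.bin>`.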

The structure of the provided DurLAR full dataset zip file is as follows:

```
DurLAR_<date>/
├── ambient/
│   ├── data/
│   │   └── <frame_number.png> [ ..... ]
│   └── timestamp.txt
├── gps/
│   └── data.csv
├── image_01/
│   ├── data/
│   │   └── <frame_number.png> [ ..... ]
│   └── timestamp.txt
├── image_02/
│   ├── data/
│   │   └── <frame_number.png> [ ..... ]
│   └── timestamp.txt
├── imu/
│   └── data.csv
├── lux/
│   └── data.csv
├── ouster_points/
│   ├── data/
│   │   └── <frame_number.bin> [ ..... ]
│   └── timestamp.txt
├── reflec/
│   ├── data/
│   │   └── <frame_number.png> [ ..... ]
│   └── timestamp.txt
└── readme.md [ this README file ]
```

The structure of the provided calibration zip file is as follows:

```
DurLAR_calibs/
├── calib_cam_to_cam.txt [ Camera to camera calibration results ]
├── calib_imu_to_lidar.txt [ IMU to LiDAR calibration results ]
└── calib_lidar_to_cam.txt [ LiDAR to camera calibration results ]
```
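The format of these calibration text files is not described here. If they follow the KITTI raw-data convention of one `key: v1 v2 ...` entry per line (an assumption; please inspect the actual files first), a minimal parser could look like the sketch below. The function name and the `R`/`T` keys in the demo are hypothetical.

```python
import numpy as np


def parse_kitti_style_calib(text):
    """Parse 'key: v1 v2 ...' lines into 1-D float arrays.

    Lines without a colon, or whose values are not all numeric (for example
    a date stamp), are skipped.
    """
    calib = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        try:
            calib[key.strip()] = np.array([float(v) for v in value.split()])
        except ValueError:
            continue  # non-numeric entry
    return calib


# Synthetic file contents; the keys "R" and "T" are hypothetical.
sample = """calib_time: 01-Jan-2021 00:00:00
R: 1 0 0 0 1 0 0 0 1
T: 0.1 0.0 -0.2
"""
calib = parse_kitti_style_calib(sample)
R = calib["R"].reshape(3, 3)  # rotation, assumed row-major
T = calib["T"].reshape(3, 1)  # translation as a column vector
print(sorted(calib), R.shape, T.ravel())
```

For the real files, read e.g. `DurLAR_calibs/calib_lidar_to_cam.txt` into a string, pass it to the parser, and check the returned keys before reshaping anything.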

## Get Started

- [Download the **calibration files**](https://github.com/l1997i/DurLAR/raw/main/DurLAR_calibs.zip)

- [Download the **calibration files** (v2, targetless)](https://github.com/l1997i/DurLAR/raw/main/DurLAR_calibs_v2.zip)

- [Download the **exemplar ROS bag** (for targetless calibration)](https://durhamuniversity-my.sharepoint.com/:f:/g/personal/mznv82_durham_ac_uk/Ei28Yy-Gb_BKoavvJ6R_jLcBfTZ_xM5cZhEFgMFNK9HhyQ?e=rxPgI9)

- [Download the **exemplar dataset** (600 frames)](https://collections.durham.ac.uk/collections/r2gq67jr192)

- [Download the **full dataset**](https://github.com/l1997i/DurLAR?tab=readme-ov-file#access-for-the-full-dataset) (fill in the form to request access)

> Note that the `csv` files in the [**exemplar dataset** (600 frames)](https://collections.durham.ac.uk/collections/r2gq67jr192) [do not include CSV header information](https://github.com/l1997i/DurLAR/issues/9). Refer to [Header of csv files](https://github.com/l1997i/DurLAR?tab=readme-ov-file#header-of-csv-files) for the first line of each `csv` file.

> **calibration files** (v2, targetless): Following the publication of the DurLAR dataset and the corresponding paper, we identified a more advanced [targetless calibration method](https://github.com/koide3/direct_visual_lidar_calibration) ([#4](https://github.com/l1997i/DurLAR/issues/4)) that outperforms the LiDAR-camera calibration technique we previously employed. We provide an [**exemplar ROS bag**](https://durhamuniversity-my.sharepoint.com/:f:/g/personal/mznv82_durham_ac_uk/Ei28Yy-Gb_BKoavvJ6R_jLcBfTZ_xM5cZhEFgMFNK9HhyQ?e=rxPgI9) for [targetless calibration](https://github.com/koide3/direct_visual_lidar_calibration), together with the corresponding [calibration results (v2)](https://github.com/l1997i/DurLAR/raw/main/DurLAR_calibs_v2.zip). Please refer to the [Appendix (arXiv)](https://arxiv.org/pdf/2406.10068) for more details.
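Once a LiDAR-to-camera extrinsic (rotation `R`, translation `T`) and a 3×3 camera intrinsic matrix `K` have been obtained from the calibration files, LiDAR points can be projected into an image with the standard pinhole model, which is a common way to build sparse depth maps. The sketch below assumes `R` and `T` map LiDAR coordinates into the camera frame (x right, y down, z forward); the function name and all numbers in the demo are synthetic, not DurLAR calibration results.

```python
import numpy as np


def project_lidar_to_image(points_xyz, R, T, K, width, height):
    """Project (N, 3) LiDAR points to pixels; returns (uv, depth) for visible points."""
    # Rigid transform into the camera frame: X_cam = R @ X_lidar + T.
    cam = points_xyz @ R.T + T.reshape(1, 3)
    # Discard points behind (or on) the image plane.
    cam = cam[cam[:, 2] > 0]
    # Pinhole projection: homogeneous pixel = K @ X_cam, then divide by depth.
    uvw = cam @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    # Keep only points that land inside the image.
    inside = ((uv[:, 0] >= 0) & (uv[:, 0] < width)
              & (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return uv[inside], cam[inside, 2]


# Synthetic example: identity extrinsic and a made-up intrinsic matrix for a
# 2048 x 1088 image (the MultiSense S21 resolution quoted above).
K = np.array([[1000.0, 0.0, 1024.0],
              [0.0, 1000.0, 544.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
T = np.zeros(3)
pts = np.array([[0.0, 0.0, 10.0],    # straight ahead
                [1.0, 0.0, 10.0],    # 1 m to the right
                [0.0, 0.0, -5.0]])   # behind the camera, dropped
uv, depth = project_lidar_to_image(pts, R, T, K, width=2048, height=1088)
print(uv, depth)
```

A sparse depth map can then be filled by rounding `uv` to integer pixel indices and writing the corresponding `depth` values there.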

### Access to the full dataset

Access to the complete DurLAR dataset can be requested through **any one** of the following links (the second is a Chinese-language form).

[1. Request access to the full dataset](https://forms.gle/ZjSs3PWeGjjnXmwg9)

[2. Request access to the full dataset (Chinese)](https://wj.qq.com/s2/9459309/4cdd/)

### Usage of the downloading script

Upon completion of the form, the download script `durlar_download` and accompanying instructions will be **automatically** provided. The DurLAR dataset can then be downloaded via the command line.

Before first use, you will most likely need to make the `durlar_download` file executable:

```bash
chmod +x durlar_download
```

By default, this script downloads the small subset for simple testing. Use the following command:

```bash
./durlar_download
```

It is also possible to select and download various test drives:

```
usage: ./durlar_download [dataset_sample_size] [drive]
dataset_sample_size = [ small | medium | full ]
drive = 1 ... 5
```

Given the substantial size of the DurLAR dataset, please download the full-size data only when necessary. For example, to download the full version of drive 5:

```bash
./durlar_download full 5
```

Throughout the download process, make sure your network connection remains stable and uninterrupted. If network issues occur, delete all DurLAR dataset folders and rerun the download script. Currently, the script supports only Ubuntu (tested on Ubuntu 18.04 and Ubuntu 20.04, amd64). To download the DurLAR dataset on other operating systems, please refer to [Durham Collections](https://collections.durham.ac.uk/collections/r2gq67jr192) for instructions.
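Durham Collections also lists MD5 checksums for the zip files, so a downloaded file can be checked before extraction. A minimal sketch using only Python's standard `hashlib`; the helper names are hypothetical and the file is read in chunks so large archives need not fit in memory.

```python
import hashlib
import os
import tempfile


def md5_of_file(path, chunk_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_md5(path, expected_hex):
    """Return True if the file's MD5 matches a published checksum (case-insensitive)."""
    return md5_of_file(path) == expected_hex.strip().lower()


# Self-check on a small temporary file standing in for a downloaded zip.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.zip")
    with open(demo_path, "wb") as f:
        f.write(b"hello")
    demo_md5 = md5_of_file(demo_path)
    demo_ok = verify_md5(demo_path, demo_md5.upper())
print(demo_md5, demo_ok)
```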

## CSV format for `imu`, `gps`, and `lux` topics

### Format description

Our `imu`, `gps`, and `lux` data are all in `CSV` format. The **first row** of each `CSV` file contains headers that **describe the meaning of each column**. Taking the `imu` CSV file as an example (only the first 9 columns are described):

1. `%time`: Timestamps in Unix epoch format.

2. `field.header.seq`: Sequence numbers.

3. `field.header.stamp`: Header timestamps.

4. `field.header.frame_id`: Frame of reference, labeled as "gps".

5. `field.orientation.x`: X-component of the orientation quaternion.

6. `field.orientation.y`: Y-component of the orientation quaternion.

7. `field.orientation.z`: Z-component of the orientation quaternion.

8. `field.orientation.w`: W-component of the orientation quaternion.

9. `field.orientation_covariance0`: First of the nine elements (`0` to `8`) of the 3×3 orientation covariance matrix.
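These column names match the ROS `sensor_msgs/Imu` message, where each covariance field holds a 3×3 matrix in row-major order. Assuming the CSV keeps that convention, the sketch below rebuilds the orientation covariance matrix from one row and converts the orientation quaternion into a heading (yaw) angle about the z axis. The helper names and the two IMU rows are synthetic.

```python
import numpy as np
import pandas as pd


def orientation_covariance(row):
    """3x3 orientation covariance from one IMU row (assumed row-major)."""
    values = [row[f"field.orientation_covariance{i}"] for i in range(9)]
    return np.asarray(values, dtype=float).reshape(3, 3)


def yaw_from_quaternion(x, y, z, w):
    """Rotation about the z axis (radians) of a unit quaternion (x, y, z, w)."""
    return np.arctan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


# Two synthetic rows: identity orientation and a 90-degree turn about z.
s = np.sqrt(0.5)
imu = pd.DataFrame({
    "field.orientation.x": [0.0, 0.0],
    "field.orientation.y": [0.0, 0.0],
    "field.orientation.z": [0.0, s],
    "field.orientation.w": [1.0, s],
    # Covariance elements 0..8 filled with their own index for illustration.
    **{f"field.orientation_covariance{i}": [float(i)] * 2 for i in range(9)},
})
imu["yaw"] = yaw_from_quaternion(imu["field.orientation.x"], imu["field.orientation.y"],
                                 imu["field.orientation.z"], imu["field.orientation.w"])
cov0 = orientation_covariance(imu.iloc[0])
print(np.degrees(imu["yaw"]).tolist())
print(cov0)
```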

### Header of `csv` files

The first line (header row) of each `csv` file is as follows.

For GPS (`gps/data.csv`):

```csv
time,field.header.seq,field.header.stamp,field.header.frame_id,field.status.status,field.status.service,field.latitude,field.longitude,field.altitude,field.position_covariance0,field.position_covariance1,field.position_covariance2,field.position_covariance3,field.position_covariance4,field.position_covariance5,field.position_covariance6,field.position_covariance7,field.position_covariance8,field.position_covariance_type
```

For the IMU (`imu/data.csv`):

```csv
time,field.header.seq,field.header.stamp,field.header.frame_id,field.orientation.x,field.orientation.y,field.orientation.z,field.orientation.w,field.orientation_covariance0,field.orientation_covariance1,field.orientation_covariance2,field.orientation_covariance3,field.orientation_covariance4,field.orientation_covariance5,field.orientation_covariance6,field.orientation_covariance7,field.orientation_covariance8,field.angular_velocity.x,field.angular_velocity.y,field.angular_velocity.z,field.angular_velocity_covariance0,field.angular_velocity_covariance1,field.angular_velocity_covariance2,field.angular_velocity_covariance3,field.angular_velocity_covariance4,field.angular_velocity_covariance5,field.angular_velocity_covariance6,field.angular_velocity_covariance7,field.angular_velocity_covariance8,field.linear_acceleration.x,field.linear_acceleration.y,field.linear_acceleration.z,field.linear_acceleration_covariance0,field.linear_acceleration_covariance1,field.linear_acceleration_covariance2,field.linear_acceleration_covariance3,field.linear_acceleration_covariance4,field.linear_acceleration_covariance5,field.linear_acceleration_covariance6,field.linear_acceleration_covariance7,field.linear_acceleration_covariance8
```

For the lux meter (`lux/data.csv`):

```csv
time,field.header.seq,field.header.stamp,field.header.frame_id,field.illuminance,field.variance
```
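As an example of using these columns, the `field.latitude` and `field.longitude` values in `gps/data.csv` can give a rough estimate of the distance driven. The sketch assumes they are decimal degrees (as in ROS `sensor_msgs/NavSatFix`, whose fields these columns match) and uses the haversine formula on a spherical Earth; the helper names and the three fixes are synthetic.

```python
import numpy as np
import pandas as pd

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between points in decimal degrees."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    a = (np.sin((lat2 - lat1) / 2.0) ** 2
         + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_M * np.arcsin(np.sqrt(a))


def path_length_m(gps):
    """Sum of great-circle distances between consecutive GPS fixes."""
    lat = gps["field.latitude"].to_numpy(dtype=float)
    lon = gps["field.longitude"].to_numpy(dtype=float)
    return float(np.sum(haversine_m(lat[:-1], lon[:-1], lat[1:], lon[1:])))


# Synthetic fixes 0.001 degrees apart along a meridian (not DurLAR data).
gps = pd.DataFrame({"field.latitude": [0.0, 0.001, 0.002],
                    "field.longitude": [0.0, 0.0, 0.0]})
print(round(path_length_m(gps), 1))
```

For the synthetic fixes this gives about 222.4 m (two steps of roughly 111.2 m). On real data, GNSS noise while the vehicle is stationary adds small spurious distances, so treat the result as an approximation.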

### To process the `csv` files

The `csv` files can be processed in several ways, for example:

**Python**: Use the pandas library to read the CSV file with the following code:

```python
import pandas as pd

# Read one topic's CSV file; pandas uses the first row as the column header.
df = pd.read_csv('imu/data.csv')
print(df)
```
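The exemplar dataset's CSV files have no header row, so `pd.read_csv` would otherwise treat the first data row as column names. One way around this is to pass `header=None` together with the column names from the headers listed above. This sketch uses the lux header and two made-up rows; the helper name is hypothetical.

```python
import io

import pandas as pd

# Column names copied from the lux header listed above.
LUX_COLUMNS = ["time", "field.header.seq", "field.header.stamp",
               "field.header.frame_id", "field.illuminance", "field.variance"]


def read_headerless_csv(path_or_buffer, columns):
    """Read a CSV file that has no header row, naming the columns explicitly."""
    return pd.read_csv(path_or_buffer, header=None, names=columns)


# Two made-up rows standing in for an exemplar lux/data.csv file.
sample = io.StringIO(
    "1626440000000000000,0,1626440000000000000,lux,123.4,0.0\n"
    "1626440000100000000,1,1626440000100000000,lux,125.0,0.0\n"
)
lux = read_headerless_csv(sample, LUX_COLUMNS)
print(lux[["time", "field.illuminance"]])
```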

**Text Editors**: Simple text editors like `Notepad` (Windows) or `TextEdit` (Mac) can also open `CSV` files, though they are less suited for data analysis.

## Folder \#Frame Verification

For easy verification of folder data and integrity, we provide the number of frames in each drive folder, as well as the [MD5 checksums](https://collections.durham.ac.uk/collections/r2gq67jr192?utf8=%E2%9C%93&cq=MD5&sort=) of the zip files.

| Folder | # of Frames |
|----------|-------------|
| 20210716 | 41993 |
| 20210901 | 23347 |
| 20211012 | 28642 |
| 20211208 | 26850 |
| 20211209 | 25079 |
| **total** | **145911** |
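A minimal sketch for comparing an extracted drive folder with the table. It assumes that every per-frame topic in `DurLAR_<date>/` (`ambient`, `reflec`, `image_01`, `image_02`, `ouster_points`) holds exactly the listed number of frames; that equal-count assumption and the helper names are not from the dataset documentation.

```python
import tempfile
from pathlib import Path

# Frame counts per drive, copied from the table above.
EXPECTED_FRAMES = {
    "20210716": 41993,
    "20210901": 23347,
    "20211012": 28642,
    "20211208": 26850,
    "20211209": 25079,
}
assert sum(EXPECTED_FRAMES.values()) == 145911  # matches the table total

# Per-frame topics and their file extensions (from the folder structure).
TOPIC_PATTERNS = {
    "ambient": "*.png",
    "reflec": "*.png",
    "image_01": "*.png",
    "image_02": "*.png",
    "ouster_points": "*.bin",
}


def count_frames(drive_dir):
    """Count the files in each topic's data/ folder of one DurLAR_<date>/ folder."""
    drive_dir = Path(drive_dir)
    return {topic: sum(1 for _ in (drive_dir / topic / "data").glob(pattern))
            for topic, pattern in TOPIC_PATTERNS.items()}


def mismatched_topics(drive_dir, date):
    """Return {topic: count} for topics whose count differs from the table."""
    expected = EXPECTED_FRAMES[date]
    return {topic: n for topic, n in count_frames(drive_dir).items()
            if n != expected}


# Self-check on a synthetic drive folder with three frames per topic.
with tempfile.TemporaryDirectory() as tmp:
    for topic, pattern in TOPIC_PATTERNS.items():
        data_dir = Path(tmp) / topic / "data"
        data_dir.mkdir(parents=True)
        for i in range(3):
            (data_dir / f"{i:010d}{pattern[1:]}").touch()
    demo_counts = count_frames(tmp)
    demo_mismatch = mismatched_topics(tmp, "20210716")
print(demo_counts)
```

On a real download, `mismatched_topics("DurLAR_20210716", "20210716")` returning an empty dict would indicate that every topic folder matches the table.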

## Intrinsic Parameters of Our Ouster OS1-128 LiDAR

The intrinsic JSON file of our LiDAR can be downloaded at [this link](https://github.com/l1997i/DurLAR/raw/refs/heads/main/os1-128.json). For more information, visit the [official user manual of OS1-128](https://data.ouster.io/downloads/software-user-manual/firmware-user-manual-v3.1.0.pdf).

Please note that **sensitive information, such as the serial number and unique device ID, has been redacted** (indicated as XXXXXXX).
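Ouster metadata files list per-beam elevation angles (in degrees) under `beam_altitude_angles`; depending on the firmware/SDK version, this key sits either at the top level or inside a `beam_intrinsics` object. The layout of `os1-128.json` is assumed rather than verified here, so the sketch below tries both. The helper names are hypothetical and the 4-beam metadata is synthetic.

```python
import json


def beam_altitude_angles(metadata):
    """Per-beam elevation angles in degrees from an Ouster metadata dict."""
    # Intrinsics may sit under a "beam_intrinsics" object or at the top level
    # (assumption based on differing Ouster metadata layouts); try both.
    intrinsics = metadata.get("beam_intrinsics", metadata)
    return [float(a) for a in intrinsics["beam_altitude_angles"]]


def vertical_fov_deg(metadata):
    """Vertical field of view, taken as the spread of the beam elevations."""
    angles = beam_altitude_angles(metadata)
    return max(angles) - min(angles)


# Synthetic 4-beam metadata; the real file should list one angle per channel.
demo = json.loads(
    '{"beam_intrinsics": {"beam_altitude_angles": [22.5, 7.5, -7.5, -22.5]}}'
)
print(len(beam_altitude_angles(demo)), vertical_fov_deg(demo))
```

For the real file, load it with `json.load` on an open handle to `os1-128.json` and check that `beam_altitude_angles` contains 128 entries, one per channel.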

---

## Reference

If you are making use of this work in any way (including our dataset and toolkits), you must reference the following paper in any report, publication, presentation, software release or any other associated materials:

[DurLAR: A High-fidelity 128-channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-modal Autonomous Driving Applications](https://dro.dur.ac.uk/34293/)

(Li Li, Khalid N. Ismail, Hubert P. H. Shum and Toby P. Breckon), In Int. Conf. 3D Vision, 2021. [[pdf](https://www.l1997i.com/assets/pdf/li21durlar_arxiv_compressed.pdf)] [[video](https://youtu.be/1IAC9RbNYjY)] [[poster](https://www.l1997i.com/assets/pdf/li21durlar_poster_v2_compressed.pdf)]

```bibtex
@inproceedings{li21durlar,
  author = {Li, L. and Ismail, K.N. and Shum, H.P.H. and Breckon, T.P.},
  title = {DurLAR: A High-fidelity 128-channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-modal Autonomous Driving Applications},
  booktitle = {Proc. Int. Conf. on 3D Vision},
  year = {2021},
  month = {December},
  publisher = {IEEE},
  keywords = {autonomous driving, dataset, high-resolution LiDAR, flash LiDAR, ground truth depth, dense depth, monocular depth estimation, stereo vision, 3D},
  category = {automotive 3Dvision},
}
```

---
