placeholder files (v1.0)

- .gitattributes +6 -0

- README.md +332 -3

- aifs-single-mse-1.0.ckpt +3 -0

- assets/aifs_diagram.png +3 -0

- assets/decoder_graph.jpeg +3 -0

- assets/encoder_graph.jpeg +3 -0

- assets/radiation_cloudcover.gif +3 -0

- assets/scorecard_single1.0_vs_ifs_2024.png +3 -0

- assets/scorecard_single1.0_vs_single0.2.1_2023.png +3 -0

- config_finetuning.yaml +483 -0

- config_pretraining.yaml +492 -0

- run_AIFS_v1.ipynb +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,9 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/aifs_diagram.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/decoder_graph.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/encoder_graph.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

assets/radiation_cloudcover.gif filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

assets/scorecard_single1.0_vs_ifs_2024.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

assets/scorecard_single1.0_vs_single0.2.1_2023.png filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,3 +1,332 @@

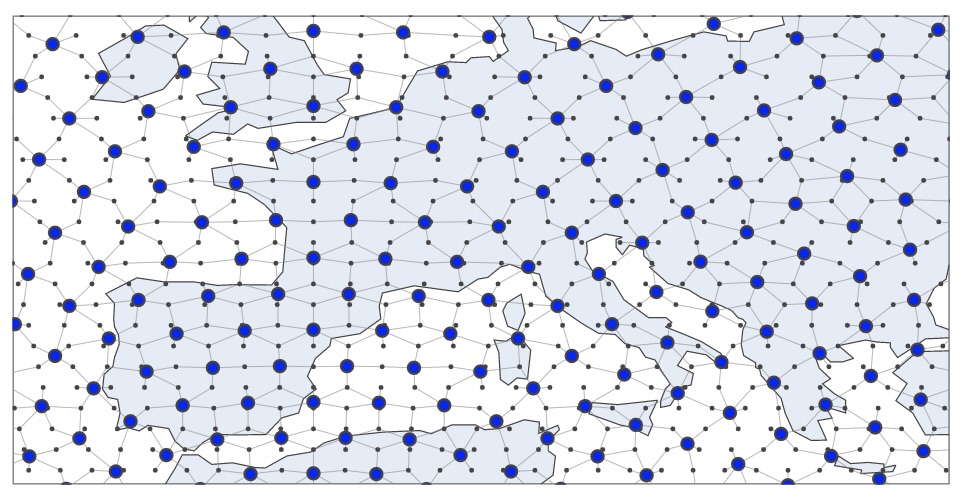

|

|

| 1 |

-

---

|

| 2 |

-

license: cc-by-4.0

|

| 3 |

-

|

| 1 |

+

---

|

| 2 |

+

license: cc-by-4.0

|

| 3 |

+

metrics:

|

| 4 |

+

- mse

|

| 5 |

+

pipeline_tag: graph-ml

|

| 6 |

+

language:

|

| 7 |

+

- en

|

| 8 |

+

library_name: anemoi

|

| 9 |

+

---

|

| 10 |

+

|

| 11 |

+

# AIFS Single - v1.0

|

| 12 |

+

|

| 13 |

+

<!-- Provide a quick summary of what the model is/does. -->

|

| 14 |

+

|

| 15 |

+

Here, we introduce the **Artificial Intelligence Forecasting System (AIFS)**, a data-driven forecast

|

| 16 |

+

model developed by the European Centre for Medium-Range Weather Forecasts (ECMWF).

|

| 17 |

+

|

| 18 |

+

The release of AIFS Single v1.0 marks the first operationally supported AIFS model. Version 1

|

| 19 |

+

supersedes the existing experimental version, [0.2.1 AIFS-single](https://huggingface.co/ecmwf/aifs-single-0.2.1).

|

| 20 |

+

Version 1.0 brings many changes to the AIFS Single model, including:

|

| 21 |

+

|

| 22 |

+

- Improved performance for upper-level atmospheric variables (AIFS Single still uses 13 pressure levels, so this improvement mainly refers to 50 and 100 hPa).

|

| 23 |

+

- Improved skill for total precipitation.

|

| 24 |

+

- Additional output variables, including 100 metre winds, snowfall, surface solar radiation and land variables such as soil moisture and soil temperature.

|

| 25 |

+

|

| 26 |

+

<div style="display: flex; justify-content: center;">

|

| 27 |

+

<img src="assets/radiation_cloudcover.gif" alt="AIFS 10 days Forecast" style="width: 50%;"/>

|

| 28 |

+

</div>

|

| 29 |

+

|

| 30 |

+

AIFS produces highly skilled forecasts for upper-air variables, surface weather parameters and

|

| 31 |

+

tropical cyclone tracks. AIFS Single is run four times daily alongside ECMWF’s physics-based NWP model and forecasts

|

| 32 |

+

are available to the public under ECMWF’s open data policy (https://www.ecmwf.int/en/forecasts/datasets/open-data).

|

| 33 |

+

Note that due to the non-determinism of GPUs, users will be unable to exactly reproduce an official AIFS forecast

|

| 34 |

+

when running AIFS Single themselves.

|

| 35 |

+

|

| 36 |

+

For more details please refer to https://confluence.ecmwf.int/display/FCST/Implementation+of+AIFS+Single+v1

|

| 37 |

+

|

| 38 |

+

## Data Details

|

| 39 |

+

|

| 40 |

+

### Data parameters

|

| 41 |

+

|

| 42 |

+

#### New parameters

|

| 43 |

+

|

| 44 |

+

More detailed information about the new parameters introduced with AIFS Single v1.0 is provided in the table below.

|

| 45 |

+

|

| 46 |

+

| Short Name | Name | Units | Component Type | Level Type |

|

| 47 |

+

|:----------:|:----:|:-----:|:--------------:|:--------:|

|

| 48 |

+

| ssrd | Surface short-wave (solar) radiation downwards | \\(J m^{-2}\\) | AIFS | sfc |

|

| 49 |

+

| strd | Surface long-wave (thermal) radiation downwards | \\(J m^{-2}\\) | AIFS | sfc |

|

| 50 |

+

| lcc | Low cloud cover | \\((0 - 1)\\) | AIFS | sfc |

|

| 51 |

+

| mcc | Medium cloud cover | \\((0 - 1)\\) | AIFS | sfc |

|

| 52 |

+

| hcc | High cloud cover | \\((0 - 1)\\) | AIFS | sfc |

|

| 53 |

+

| sf | Snowfall water equivalent | \\(kg m^{-2}\\) | AIFS | sfc |

|

| 54 |

+

| tcc | Total cloud cover | \\((0 - 1)\\) | AIFS | sfc |

|

| 55 |

+

| 100u | 100 metre U wind component | \\(m s^{-1}\\) | AIFS | sfc |

|

| 56 |

+

| 100v | 100 metre V wind component | \\(m s^{-1}\\) | AIFS | sfc |

|

| 57 |

+

| rowe | Runoff water equivalent (surface plus subsurface) | \\(kg m^{-2}\\) | AIFS | sfc |

|

| 58 |

+

| vsw | Volumetric soil moisture | \\(m^3 m^{-3}\\) | AIFS | sol |

|

| 59 |

+

| sot | Soil temperature | \\(K\\) | AIFS | sol |

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

#### Changes to existing parameters

|

| 63 |

+

|

| 64 |

+

There are no changes to existing parameters already introduced with AIFS Single v0.2.1.

|

| 65 |

+

|

| 66 |

+

**Note**

|

| 67 |

+

Regarding precipitation units, the AIFS model was trained on \\(m^{3}/m^{2}\\) (i.e. metres of water) and therefore produces precipitation in those units.

|

| 68 |

+

Precipitation retrieved from the ECMWF open data, by contrast, is provided in \\(mm\\).
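The unit difference in the note above amounts to a factor of 1000. A minimal sketch of the conversion, assuming the model output is a depth in metres; the function name `precip_m_to_mm` is hypothetical and not part of any Anemoi package:

```python
def precip_m_to_mm(precip_m: float) -> float:
    """Convert a precipitation depth from metres (m^3 of water per m^2) to millimetres."""
    return precip_m * 1000.0


# Example: a model output of 0.0025 m is roughly 2.5 mm of precipitation.
model_output_m = 0.0025
open_data_equivalent_mm = precip_m_to_mm(model_output_m)
print(f"{open_data_equivalent_mm:.2f} mm")
```

Applying this factor once, at the point where model output is compared with open data, avoids mixing the two unit conventions.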

|

| 69 |

+

|

| 70 |

+

#### Discontinued parameters

|

| 71 |

+

|

| 72 |

+

No parameters have been discontinued relative to the previous version, AIFS Single v0.2.1.

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

## Model Details

|

| 76 |

+

|

| 77 |

+

### Model Description

|

| 78 |

+

|

| 79 |

+

<!-- Provide a longer summary of what this model is. -->

|

| 80 |

+

|

| 81 |

+

AIFS is based on a graph neural network (GNN) encoder and decoder, and a sliding window transformer processor,

|

| 82 |

+

and is trained on ECMWF’s ERA5 re-analysis and ECMWF’s operational numerical weather prediction (NWP) analyses.

|

| 83 |

+

|

| 84 |

+

<div style="display: flex; justify-content: center;">

|

| 85 |

+

<img src="assets/encoder_graph.jpeg" alt="Encoder graph" style="width: 50%;"/>

|

| 86 |

+

<img src="assets/decoder_graph.jpeg" alt="Decoder graph" style="width: 50%;"/>

|

| 87 |

+

</div>

|

| 88 |

+

|

| 89 |

+

It has a flexible and modular design and supports several levels of parallelism to enable training on

|

| 90 |

+

high resolution input data. AIFS forecast skill is assessed by comparing its forecasts to NWP analyses

|

| 91 |

+

and direct observational data.

|

| 92 |

+

|

| 93 |

+

- **Developed by:** ECMWF

|

| 94 |

+

- **Model type:** Encoder-processor-decoder model

|

| 95 |

+

- **License:** These model weights are published under a Creative Commons Attribution 4.0 International (CC BY 4.0) licence.

|

| 96 |

+

To view a copy of this licence, visit https://creativecommons.org/licenses/by/4.0/.

|

| 97 |

+

The notebooks and other script files are published under an Apache 2.0 licence. To view a copy of this licence, visit https://www.apache.org/licenses/LICENSE-2.0.txt.

|

| 98 |

+

|

| 99 |

+

### Model resolution

|

| 100 |

+

|

| 101 |

+

There are no changes in resolution compared to the previous version, AIFS Single v0.2.1.

|

| 102 |

+

|

| 103 |

+

| | Component | Horizontal Resolution [km] | Vertical Resolution [levels] |

|

| 104 |

+

|---|:---:|:---:|:---:|

|

| 105 |

+

| Atmosphere | AIFS-single v1.0 | ~ 31 | 13 |

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

### Model Sources

|

| 109 |

+

|

| 110 |

+

<!-- Provide the basic links for the model. -->

|

| 111 |

+

|

| 112 |

+

- **Repository:** [Anemoi](https://anemoi.readthedocs.io/en/latest/index.html) is an open-source framework for

|

| 113 |

+

creating machine learning (ML) weather forecasting systems, which ECMWF and a range of national meteorological

|

| 114 |

+

services across Europe have co-developed.

|

| 115 |

+

- **Paper:** https://arxiv.org/pdf/2406.01465

|

| 116 |

+

|

| 117 |

+

## How to Get Started with the Model

|

| 118 |

+

|

| 119 |

+

To generate a new forecast using AIFS, you can use [anemoi-inference](https://github.com/ecmwf/anemoi-inference). In the [following notebook](run_AIFS_v1.ipynb), a

|

| 120 |

+

step-by-step workflow is specified to run the AIFS using the HuggingFace model:

|

| 121 |

+

|

| 122 |

+

1. **Install Required Packages and Imports**

|

| 123 |

+

2. **Retrieve Initial Conditions from ECMWF Open Data**

|

| 124 |

+

- Select a date

|

| 125 |

+

- Get the data from the [ECMWF Open Data API](https://www.ecmwf.int/en/forecasts/datasets/open-data)

|

| 126 |

+

- Get input fields

|

| 127 |

+

- Add the single levels fields and pressure levels fields

|

| 128 |

+

- Convert geopotential height into geopotential

|

| 129 |

+

- Create the initial state

|

| 130 |

+

3. **Load the Model and Run the Forecast**

|

| 131 |

+

- Download the Model's Checkpoint from Hugging Face

|

| 132 |

+

- Create a runner

|

| 133 |

+

- Run the forecast using anemoi-inference

|

| 134 |

+

4. **Inspect the generated forecast**

|

| 135 |

+

- Plot a field
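One step in the workflow above, converting geopotential height into geopotential, is a simple scaling. A minimal sketch, assuming the conventional standard gravity of 9.80665 m s⁻² as the conversion factor; the function name `geopotential_from_height` is hypothetical, and the notebook's own implementation may differ:

```python
STANDARD_GRAVITY = 9.80665  # m s^-2, conventional standard gravity (assumed conversion factor)


def geopotential_from_height(gh_values):
    """Convert geopotential height (gpm) to geopotential (m^2 s^-2)."""
    return [gh * STANDARD_GRAVITY for gh in gh_values]


# Example: two illustrative 500 hPa geopotential heights, in geopotential metres.
gh_500 = [5500.0, 5600.0]
z_500 = geopotential_from_height(gh_500)
print(z_500)
```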

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

🚨 **Note** we train AIFS using `flash_attention` (https://github.com/Dao-AILab/flash-attention).

|

| 139 |

+

The `flash_attention` package imposes certain software and hardware requirements, which are listed in the "Installation and Features" section of https://github.com/Dao-AILab/flash-attention.

|

| 140 |

+

|

| 141 |

+

🚨 **Note** the `aifs-single-mse-1.0.ckpt` checkpoint contains only the model’s weights.

|

| 142 |

+

That file does not contain any information about the optimizer states, lr-scheduler states, etc.

|

| 143 |

+

|

| 144 |

+

## How to train AIFS Single v1.0

|

| 145 |

+

|

| 146 |

+

To train this model you can use the configuration files included in this repository and the following Anemoi packages:

|

| 147 |

+

|

| 148 |

+

```

|

| 149 |

+

anemoi-training==0.3.1

|

| 150 |

+

anemoi-models==0.4.0

|

| 151 |

+

anemoi-graphs==0.4.4

|

| 152 |

+

```

|

| 153 |

+

and run the pretraining stage as follows:

|

| 154 |

+

|

| 155 |

+

```

|

| 156 |

+

export DATASETS_PATH=???????

|

| 157 |

+

export OUTPUT_PATH=???????

|

| 158 |

+

anemoi-training train --config-name=config_pretraining.yaml

|

| 159 |

+

```

|

| 160 |

+

|

| 161 |

+

Now, you can fine-tune your model for rollout using the `run_id` of your previous run.

|

| 162 |

+

Note: this `run_id` identifies the pretraining run, and you can find it in the checkpoint folder path.

|

| 163 |

+

For more details, please refer to https://anemoi.readthedocs.io/projects/training/en/latest/user-guide/training.html#restarting-a-training-run

|

| 164 |

+

|

| 165 |

+

```

|

| 166 |

+

export PRETRAINING_RUN_ID=???????

|

| 167 |

+

anemoi-training train --config-name=config_finetuning.yaml

|

| 168 |

+

```

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

## Training Details

|

| 172 |

+

|

| 173 |

+

### Training Data

|

| 174 |

+

|

| 175 |

+

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

|

| 176 |

+

|

| 177 |

+

AIFS is trained to produce 6-hour forecasts. It receives as input a representation of the atmospheric states

|

| 178 |

+

at \\(t_{−6h}\\), \\(t_{0}\\), and then forecasts the state at time \\(t_{+6h}\\).
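Forecasts beyond 6 hours can be illustrated by feeding each prediction back in as the newest input. A minimal sketch of this loop, assuming an autoregressive rollout; `rollout` and `toy_step` are hypothetical names, and `toy_step` is a linear extrapolation standing in for the trained model:

```python
from typing import Callable, List, Sequence

State = Sequence[float]


def rollout(step: Callable[[State, State], State],
            prev: State, curr: State, n_steps: int) -> List[State]:
    """Apply a two-input, 6-hour step function autoregressively."""
    forecasts = []
    for _ in range(n_steps):
        nxt = step(prev, curr)      # state at t+6h from states at t-6h and t0
        forecasts.append(nxt)
        prev, curr = curr, nxt      # slide the two-state input window forward
    return forecasts


def toy_step(prev: State, curr: State) -> State:
    """Stand-in for the trained model: linear extrapolation of each value."""
    return [2 * c - p for p, c in zip(prev, curr)]


states = rollout(toy_step, prev=[0.0], curr=[1.0], n_steps=4)
lead_times_h = [6 * (i + 1) for i in range(len(states))]
print(list(zip(lead_times_h, states)))
```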

|

| 179 |

+

|

| 180 |

+

<div style="display: flex; justify-content: center;">

|

| 181 |

+

<img src="assets/aifs_diagram.png" alt="AIFS 2m Temperature" style="width: 80%;"/>

|

| 182 |

+

</div>

|

| 183 |

+

|

| 184 |

+

The full list of input and output fields is shown below:

|

| 185 |

+

|

| 186 |

+

| Field | Level type | Input/Output |

|

| 187 |

+

|-------------------------------------------------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------|--------------|

|

| 188 |

+

| Geopotential, horizontal and vertical wind components, specific humidity, temperature | Pressure level: 50, 100, 150, 200, 250, 300, 400, 500, 600, 700, 850, 925, 1000 | Both |

|

| 189 |

+

| Surface pressure, mean sea-level pressure, skin temperature, 2 m temperature, 2 m dewpoint temperature, 10 m horizontal wind components, total column water | Surface | Both |

|

| 190 |

+

| Soil moisture and soil temperature (layers 1 & 2) | Surface | Both |

|

| 191 |

+

| 100 m horizontal wind components, radiation (surface short-wave (solar) radiation downwards and surface long-wave (thermal) radiation downwards), cloud variables (tcc, hcc, mcc, lcc), runoff and snowfall | Surface | Output |

|

| 192 |

+

| Total precipitation, convective precipitation | Surface | Output |

|

| 193 |

+

| Land-sea mask, orography, standard deviation of sub-grid orography, slope of sub-scale orography, insolation, latitude/longitude, time of day/day of year | Surface | Input |

|

| 194 |

+

|

| 195 |

+

Input and output states are normalised to unit variance and zero mean for each level. Some of

|

| 196 |

+

the forcing variables, like orography, are min-max normalised.
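The two normalisation schemes mentioned above can be sketched as follows. This is an illustration only: it computes the statistics from the sample itself and uses the population standard deviation, both of which are assumptions (in training, the statistics would come from the dataset), and the function names are hypothetical.

```python
from statistics import fmean, pstdev


def mean_std_normalise(values):
    """Rescale values to zero mean and unit variance."""
    mu = fmean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max_normalise(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


t2m = [270.0, 280.0, 290.0]          # illustrative 2 m temperatures in K
orography = [0.0, 1500.0, 3000.0]    # illustrative surface heights in m

t2m_norm = mean_std_normalise(t2m)
orography_norm = min_max_normalise(orography)
print(t2m_norm, orography_norm)
```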

|

| 197 |

+

|

| 198 |

+

### Training Procedure

|

| 199 |

+

|

| 200 |

+

Based on our experiments, the final training recipe for AIFS Single v1.0 deviates slightly

|

| 201 |

+

from the one used for AIFS Single v0.2.1 since we found that we could get a well trained model by skipping the ERA5

|

| 202 |

+

rollout and directly doing the rollout on the operational-analysis (extended) dataset. When we say 'extended' we refer

|

| 203 |

+

to the fact that for AIFS Single v0.2.1 we used just operational-analysis data from 2019 to 2021, while in this new

|

| 204 |

+

release we have done the fine-tuning from 2016 to 2022.

|

| 205 |

+

|

| 206 |

+

The other important change in the fine-tuning stage is that for AIFS Single v0.2.1 after the 6hr model training the

|

| 207 |

+

optimiser was not restarted (i.e. rollout was done with the minimal lr of \\(3 × 10^{-7}\\)). For this release we have seen

|

| 208 |

+

that restarting the optimiser for the rollout improves the model's performance. For the operational fine-tuning rollout

|

| 209 |

+

stage, the learning rate cycle is restarted, gradually decreasing to the minimum value at the end of rollout.

|

| 210 |

+

|

| 211 |

+

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

|

| 212 |

+

|

| 213 |

+

- **Pre-training**: It was performed on ERA5 for the years 1979 to 2022 with a cosine learning rate (LR) schedule and a

|

| 214 |

+

total of 260,000 steps. The LR is increased from 0 to \\(10^{-4}\\) during the first 1000 steps, then it is annealed to a

|

| 215 |

+

minimum of \\(3 × 10^{-7}\\). The local learning rate used for this stage is \\(3.125 × 10^{-5}\\).

|

| 216 |

+

- **Fine-tuning**: The pre-training is then followed by rollout on operational real-time IFS NWP analyses for the years

|

| 217 |

+

2016 to 2022, this time with a local learning rate of \\(8 × 10^{−7}\\), which is decreased to \\(3 × 10^{−7}\\). Rollout steps

|

| 218 |

+

increase per epoch. In this second stage the warm-up period of the optimiser is 100 steps to account for the shorter length

|

| 219 |

+

of this stage. The number of optimizer steps is 7900 (12 epochs with ~630 steps per epoch).
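The pre-training schedule described above (warm-up from 0 to \\(10^{-4}\\) over 1000 steps, then annealing to \\(3 × 10^{-7}\\) by step 260,000) can be sketched as below. The linear warm-up and single half-cosine decay are assumptions about the exact curve, and the function name `learning_rate` is hypothetical rather than taken from the Anemoi configuration.

```python
import math


def learning_rate(step, peak_lr=1e-4, min_lr=3e-7,
                  warmup_steps=1000, total_steps=260_000):
    """Linear warm-up followed by cosine annealing to a minimum value."""
    if step < warmup_steps:
        # Warm-up: increase linearly from 0 to the peak learning rate.
        return peak_lr * step / warmup_steps
    # Fraction of the annealing phase completed, capped at 1.
    progress = min((step - warmup_steps) / (total_steps - warmup_steps), 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for s in (0, 500, 1000, 130_500, 260_000):
    print(s, learning_rate(s))
```

The same function could illustrate the fine-tuning stage by passing a shorter warm-up and a smaller number of steps, with the caveat that the source does not state the exact curve used there.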

|

| 220 |

+

|

| 221 |

+

As in the previous version of AIFS Single, for fine-tuning and for initialisation of the model during inference, IFS fields

|

| 222 |

+

are interpolated from their native O1280 resolution (approximately \\(0.1°\\)) down to N320 (approximately \\(0.25°\\)).

|

| 223 |

+

|

| 224 |

+

#### Training Hyperparameters

|

| 225 |

+

|

| 226 |

+

- **Optimizer:** We use *AdamW* (Loshchilov and Hutter [2019]) with the \\(β\\)-coefficients set to 0.9 and 0.95.

|

| 227 |

+

|

| 228 |

+

- **Loss function:** The loss function is an area-weighted mean squared error (MSE) between the target atmospheric state

|

| 229 |

+

and prediction.

|

| 230 |

+

|

| 231 |

+

- **Loss scaling:** A loss scaling is applied for each output variable. The scaling was chosen empirically such that

|

| 232 |

+

all prognostic variables have roughly equal contributions to the loss, with the exception of the vertical velocities,

|

| 233 |

+

for which the weight was reduced. The loss weights also decrease linearly with height, which means that levels in

|

| 234 |

+

the upper atmosphere (e.g., 50 hPa) contribute relatively little to the total loss value.
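The loss described above combines area weights over grid points with per-variable scaling. A minimal sketch under stated assumptions: the normalisation (area weights summing to one, then averaging over variables) is a guess at one reasonable convention, the weights are illustrative, and `weighted_mse` is a hypothetical name, not the Anemoi implementation.

```python
def weighted_mse(pred, target, area_weights, variable_weights):
    """Area-weighted, variable-scaled MSE.

    pred and target are nested lists indexed as [grid_point][variable].
    """
    total_area = sum(area_weights)
    loss = 0.0
    for p_row, t_row, area in zip(pred, target, area_weights):
        # Scaled squared error summed over variables at this grid point.
        point_err = sum(w * (p - t) ** 2
                        for p, t, w in zip(p_row, t_row, variable_weights))
        loss += (area / total_area) * point_err
    return loss / len(variable_weights)


pred = [[1.0, 2.0], [3.0, 4.0]]
target = [[0.0, 2.0], [3.0, 2.0]]
area_weights = [1.0, 3.0]        # e.g. a larger cell near the equator
variable_weights = [1.0, 0.5]    # e.g. a down-weighted second variable

loss_value = weighted_mse(pred, target, area_weights, variable_weights)
print(loss_value)
```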

|

| 235 |

+

|

| 236 |

+

#### Speeds, Sizes, Times

|

| 237 |

+

|

| 238 |

+

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

|

| 239 |

+

|

| 240 |

+

Data parallelism is used for training, with a batch size of 16. One model instance is split across four 40GB A100

|

| 241 |

+

GPUs within one node. Training is done using mixed precision (Micikevicius et al. [2018]), and the entire process

|

| 242 |

+

takes about one week, with 64 GPUs in total. The checkpoint size is 1.19 GB and as mentioned above, it does not include the optimizer

|

| 243 |

+

state.
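The parallelism figures above can be cross-checked with a little arithmetic. This sketch assumes the batch size of 16 is the global batch across all model instances, which the text does not state explicitly:

```python
total_gpus = 64
gpus_per_model_instance = 4   # one model instance is split across four GPUs
batch_size = 16               # assumed to be the global batch size

# Number of model replicas taking part in data parallelism.
model_instances = total_gpus // gpus_per_model_instance

# Samples processed by each replica per optimizer step.
samples_per_instance = batch_size // model_instances

print(model_instances, samples_per_instance)
```

Under that assumption, each of the 16 model instances processes one sample per step.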

|

| 244 |

+

|

| 245 |

+

## Evaluation

|

| 246 |

+

|

| 247 |

+

<!-- This section describes the evaluation protocols and provides the results. -->

|

| 248 |

+

|

| 249 |

+

AIFS is evaluated against ECMWF IFS (Integrated Forecast System) for 2022. The results of this evaluation are summarised in

|

| 250 |

+

the scorecard below that compares different forecast skill measures across a range of

|

| 251 |

+

variables. For verification, each system is compared against the operational ECMWF analysis from which the forecasts

|

| 252 |

+

are initialised. In addition, the forecasts are compared against radiosonde observations of geopotential, temperature

|

| 253 |

+

and windspeed, and SYNOP observations of 2 m temperature, 10 m wind and 24 h total precipitation. The definition

|

| 254 |

+

of the metrics, such as ACC (ccaf), RMSE (rmsef) and forecast activity (standard deviation of forecast anomaly,

|

| 255 |

+

sdaf) can be found in e.g. Ben Bouallegue et al. [2024].

|

| 256 |

+

|

| 257 |

+

### AIFS Single v1.0 vs AIFS Single v0.2.1 (2023)

|

| 258 |

+

|

| 259 |

+

<div style="display: flex; justify-content: center;">

|

| 260 |

+

<img src="assets/scorecard_single1.0_vs_single0.2.1_2023.png" alt="Scorecard comparing forecast scores of AIFS Single v1.0 versus AIFS Single v0.2.1 (2023)" style="width: 80%;"/>

|

| 261 |

+

</div>

|

| 262 |

+

|

| 263 |

+

### AIFS Single v1.0 vs IFS (2024)

|

| 264 |

+

|

| 265 |

+

<div style="display: flex; justify-content: center;">

|

| 266 |

+

<img src="assets/scorecard_single1.0_vs_ifs_2024.png" alt="Scorecard comparing forecast scores of AIFS Single v1.0 versus IFS (2024)" style="width: 80%;"/>

|

| 267 |

+

</div>

|

| 268 |

+

|

| 269 |

+

|

| 270 |

+

Forecasts are initialised at 00 and 12 UTC. The scorecards show relative score changes as a function of lead time (day 1 to 10) for northern extra-tropics (n.hem),

|

| 271 |

+

southern extra-tropics (s.hem), tropics and Europe. Blue colours mark score improvements and red colours score

|

| 272 |

+

degradations. Purple colours indicate an increase in standard deviation of forecast anomaly, while green colours

|

| 273 |

+

indicate a reduction. Framed rectangles indicate 95% significance level. Variables are geopotential (z), temperature

|

| 274 |

+

(t), wind speed (ff), mean sea level pressure (msl), 2 m temperature (2t), 10 m wind speed (10ff) and 24 hr total

|

| 275 |

+

precipitation (tp). Numbers behind variable abbreviations indicate variables on pressure levels (e.g., 500 hPa), and

|

| 276 |

+

suffix indicates verification against IFS NWP analyses (an) or radiosonde and SYNOP observations (ob). Scores

|

| 277 |

+

shown are anomaly correlation (ccaf), SEEPS (seeps, for precipitation), RMSE (rmsef) and standard deviation of

|

| 278 |

+

forecast anomaly (sdaf, see text for more explanation).
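The scores named above can be illustrated with simplified, unweighted versions. This is a sketch under assumptions: operational verification applies area weighting and follows the definitions in the cited reference, the anomaly correlation here is the uncentred form, and the function names and sample values are hypothetical.

```python
import math


def rmse(forecast, analysis):
    """Root-mean-square error between forecast and verifying analysis."""
    return math.sqrt(sum((f - a) ** 2 for f, a in zip(forecast, analysis))
                     / len(forecast))


def anomaly_correlation(forecast, analysis, climatology):
    """Uncentred correlation of forecast and analysis anomalies."""
    fa = [f - c for f, c in zip(forecast, climatology)]
    aa = [a - c for a, c in zip(analysis, climatology)]
    num = sum(x * y for x, y in zip(fa, aa))
    den = math.sqrt(sum(x * x for x in fa) * sum(y * y for y in aa))
    return num / den


def forecast_activity(forecast, climatology):
    """Standard deviation of the forecast anomaly."""
    anomalies = [f - c for f, c in zip(forecast, climatology)]
    mean = sum(anomalies) / len(anomalies)
    return math.sqrt(sum((x - mean) ** 2 for x in anomalies) / len(anomalies))


forecast = [281.0, 284.0, 290.0]
analysis = [280.0, 285.0, 289.0]
climatology = [282.0, 283.0, 288.0]

print(rmse(forecast, analysis),
      anomaly_correlation(forecast, analysis, climatology),
      forecast_activity(forecast, climatology))
```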

|

| 279 |

+

|

| 280 |

+

|

| 281 |

+

## Known limitations

|

| 282 |

+

- This version of AIFS shares certain limitations with some of the other data-driven weather forecast models that are trained with a weighted MSE loss, such as blurring of the forecast fields at longer lead times.

|

| 283 |

+

- AIFS exhibits reduced forecast skill in the stratosphere, partially due to a low model top.

|

| 284 |

+

- AIFS currently provides reduced intensity of some high-impact systems such as tropical cyclones.

|

| 285 |

+

|

| 286 |

+

Please refer to https://confluence.ecmwf.int/display/FCST/Known+AIFS+Forecasting+Issues for further details.

|

| 287 |

+

|

| 288 |

+

## Technical Specifications

|

| 289 |

+

|

| 290 |

+

### Hardware

|

| 291 |

+

|

| 292 |

+

<!-- {{ hardware_requirements | default("[More Information Needed]", true)}} -->

|

| 293 |

+

|

| 294 |

+

We acknowledge PRACE for awarding us access to Leonardo, CINECA, Italy. In particular, this version of the AIFS has been trained

|

| 295 |

+

on 64 A100 GPUs (40GB).

|

| 296 |

+

|

| 297 |

+

### Software

|

| 298 |

+

|

| 299 |

+

The model was developed and trained using the [Anemoi framework](https://anemoi-docs.readthedocs.io/en/latest/index.html).

|

| 300 |

+

Anemoi is a framework for developing machine learning weather forecasting models. It comprises components or packages

|

| 301 |

+

for preparing training datasets, conducting ML model training and a registry for datasets and trained models. Anemoi

|

| 302 |

+

provides tools for operational inference, including interfacing to verification software. As a framework it seeks to

|

| 303 |

+

handle many of the complexities that meteorological organisations will share, allowing them to easily train models from

|

| 304 |

+

existing recipes but with their own data.

|

| 305 |

+

|

| 306 |

+

## Citation

|

| 307 |

+

|

| 308 |

+

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

|

| 309 |

+

|

| 310 |

+

If you use this model in your work, please cite it as follows:

|

| 311 |

+

|

| 312 |

+

**BibTeX:**

|

| 313 |

+

|

| 314 |

+

```

|

| 315 |

+

@article{lang2024aifs,

|

| 316 |

+

title={AIFS-ECMWF's data-driven forecasting system},

|

| 317 |

+

author={Lang, Simon and Alexe, Mihai and Chantry, Matthew and Dramsch, Jesper and Pinault, Florian and Raoult, Baudouin and Clare, Mariana CA and Lessig, Christian and Maier-Gerber, Michael and Magnusson, Linus and others},

|

| 318 |

+

journal={arXiv preprint arXiv:2406.01465},

|

| 319 |

+

year={2024}

|

| 320 |

+

}

|

| 321 |

+

```

|

| 322 |

+

|

| 323 |

+

**APA:**

|

| 324 |

+

|

| 325 |

+

```

|

| 326 |

+

Lang, S., Alexe, M., Chantry, M., Dramsch, J., Pinault, F., Raoult, B., ... & Rabier, F. (2024). AIFS-ECMWF's data-driven forecasting system. arXiv preprint arXiv:2406.01465.

|

| 327 |

+

```

|

| 328 |

+

|

| 329 |

+

|

| 330 |

+

## More Information

|

| 331 |

+

|

| 332 |

+

[Find the paper here](https://arxiv.org/abs/2406.01465)

|

aifs-single-mse-1.0.ckpt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:1fed399c097c0127d5bbe074f4f8bbc123759736145d990699c215ff07543ccd

|

| 3 |

+

size 994084883

|

assets/aifs_diagram.png

ADDED

|

Git LFS Details

|

assets/decoder_graph.jpeg

ADDED

|

Git LFS Details

|

assets/encoder_graph.jpeg

ADDED

|

Git LFS Details

|

assets/radiation_cloudcover.gif

ADDED

|

Git LFS Details

|

assets/scorecard_single1.0_vs_ifs_2024.png

ADDED

|

Git LFS Details

|

assets/scorecard_single1.0_vs_single0.2.1_2023.png

ADDED

|

Git LFS Details

|

config_finetuning.yaml

ADDED

|

@@ -0,0 +1,483 @@

|

| 1 |

+

data:

|

| 2 |

+

format: zarr

|

| 3 |

+

resolution: n320

|

| 4 |

+

frequency: 6h

|

| 5 |

+

timestep: 6h

|

| 6 |

+

forcing:

|

| 7 |

+

- cos_latitude

|

| 8 |

+

- cos_longitude

|

| 9 |

+

- sin_latitude

|

| 10 |

+

- sin_longitude

|

| 11 |

+

- cos_julian_day

|

| 12 |

+

- cos_local_time

|

| 13 |

+

- sin_julian_day

|

| 14 |

+

- sin_local_time

|

| 15 |

+

- insolation

|

| 16 |

+

- lsm

|

| 17 |

+

- sdor

|

| 18 |

+

- slor

|

| 19 |

+

- z

|

| 20 |

+

diagnostic:

|

| 21 |

+

- tp

|

| 22 |

+

- cp

|

| 23 |

+

- sf

|

| 24 |

+

- tcc

|

| 25 |

+

- hcc

|

| 26 |

+

- lcc

|

| 27 |

+

- mcc

|

| 28 |

+

- ro

|

| 29 |

+

- ssrd

|

| 30 |

+

- strd

|

| 31 |

+

- 100u

|

| 32 |

+

- 100v

|

| 33 |

+

remapped: null

|

| 34 |

+

normalizer:

|

| 35 |

+

default: mean-std

|

| 36 |

+

remap:

|

| 37 |

+

cp: tp

|

| 38 |

+

sf: tp

|

| 39 |

+

std:

|

| 40 |

+

- tp

|

| 41 |

+

- cp

|

| 42 |

+

- sf

|

| 43 |

+

- ro

|

| 44 |

+

- tcw

|

| 45 |

+

- ssrd

|

| 46 |

+

- q_50

|

| 47 |

+

- q_100

|

| 48 |

+

- q_150

|

| 49 |

+

- q_200

|

| 50 |

+

- q_250

|

| 51 |

+

- q_300

|

| 52 |

+

- q_400

|

| 53 |

+

- q_500

|

| 54 |

+

- q_600

|

| 55 |

+

- q_700

|

| 56 |

+

- q_850

|

| 57 |

+

- q_925

|

| 58 |

+

- q_1000

|

| 59 |

+

min-max: null

|

| 60 |

+

max:

|

| 61 |

+

- sdor

|

| 62 |

+

- slor

|

| 63 |

+

- z

|

| 64 |

+

none:

|

| 65 |

+

- cos_latitude

|

| 66 |

+

- cos_longitude

|

| 67 |

+

- sin_latitude

|

| 68 |

+

- sin_longitude

|

| 69 |

+

- cos_julian_day

|

| 70 |

+

- cos_local_time

|

| 71 |

+

- sin_julian_day

|

| 72 |

+

- sin_local_time

|

| 73 |

+

- insolation

|

| 74 |

+

- lsm

|

| 75 |

+

- tcc

|

| 76 |

+

- mcc

|

| 77 |

+

- hcc

|

| 78 |

+

- lcc

|

| 79 |

+

- swvl1

|

| 80 |

+

- swvl2

|

| 81 |

+

imputer:

|

| 82 |

+

default: none

|

| 83 |

+

remapper:

|

| 84 |

+

default: none

|

| 85 |

+

processors:

|

| 86 |

+

normalizer:

|

| 87 |

+

_target_: anemoi.models.preprocessing.normalizer.InputNormalizer

|

| 88 |

+

_convert_: all

|

| 89 |

+

config:

|

| 90 |

+

default: mean-std

|

| 91 |

+

remap:

|

| 92 |

+

cp: tp

|

| 93 |

+

sf: tp

|

| 94 |

+

std:

|

| 95 |

+

- tp

|

| 96 |

+

- cp

|

| 97 |

+

- sf

|

| 98 |

+

- ro

|

| 99 |

+

- tcw

|

| 100 |

+

- ssrd

|

| 101 |

+

- q_50

|

| 102 |

+

- q_100

|

| 103 |

+

- q_150

|

| 104 |

+

- q_200

|

| 105 |

+

- q_250

|

| 106 |

+

- q_300

|

| 107 |

+

- q_400

|

| 108 |

+

- q_500

|

| 109 |

+

- q_600

|

| 110 |

+

- q_700

|

| 111 |

+

- q_850

|

| 112 |

+

- q_925

|

| 113 |

+

- q_1000

|

| 114 |

+

min-max: null

|

| 115 |

+

max:

|

| 116 |

+

- sdor

|

| 117 |

+

- slor

|

| 118 |

+

- z

|

| 119 |

+

none:

|

| 120 |

+

- cos_latitude

|

| 121 |

+

- cos_longitude

|

| 122 |

+

- sin_latitude

|

| 123 |

+

- sin_longitude

|

| 124 |

+

- cos_julian_day

|

| 125 |

+

- cos_local_time

|

| 126 |

+

- sin_julian_day

|

| 127 |

+

- sin_local_time

|

| 128 |

+

- insolation

|

| 129 |

+

- lsm

|

| 130 |

+

- tcc

|

| 131 |

+

- mcc

|

| 132 |

+

- hcc

|

| 133 |

+

- lcc

|

| 134 |

+

- swvl1

|

| 135 |

+

- swvl2

|

| 136 |

+

num_features: 115

|

| 137 |

+
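The `normalizer` block above assigns each variable one of several modes (`mean-std`, `std`, `max`, `none`) and remaps `cp` and `sf` to `tp`. A minimal sketch of what those mode names conventionally mean follows. It assumes the statistics are precomputed per variable and that `remap` means "use the other variable's statistics"; the helper names (`normalise`, `stats_for`) and the numbers are hypothetical and are not the `InputNormalizer` API.

```python
# Illustrative only: hypothetical helpers, not anemoi's InputNormalizer.

def stats_for(variable, stats, remap):
    """Return the statistics to use for a variable, honouring a remap such as cp -> tp."""
    return stats[remap.get(variable, variable)]


def normalise(values, method, variable_stats):
    """Apply one of the normalisation modes named in the config to a list of floats."""
    if method == "mean-std":
        # Centre on the mean, then scale by the standard deviation.
        return [(v - variable_stats["mean"]) / variable_stats["stdev"] for v in values]
    if method == "std":
        # Scale only, so zero stays zero (useful for non-negative fields such as tp).
        return [v / variable_stats["stdev"] for v in values]
    if method == "max":
        # Scale by the maximum, as configured for sdor, slor and z.
        return [v / variable_stats["maximum"] for v in values]
    if method == "none":
        # Leave the field untouched (e.g. sin/cos forcings already in [-1, 1]).
        return list(values)
    raise ValueError(f"unknown normalisation method: {method}")


# Made-up statistics for demonstration.
stats = {"tp": {"mean": 3.0, "stdev": 2.0, "maximum": 4.0}}
remap = {"cp": "tp", "sf": "tp"}

cp_scaled = normalise([1.0, 3.0], "std", stats_for("cp", stats, remap))
```

Here `cp` has no statistics of its own, so it is scaled by the `tp` standard deviation, which keeps the precipitation components on the same scale as the total.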

|

| 138 |

+

dataloader:

|

| 139 |

+

prefetch_factor: 2

|

| 140 |

+

pin_memory: True

|

| 141 |

+

read_group_size: 4

|

| 142 |

+

num_workers:

|

| 143 |

+

training: 8

|

| 144 |

+

validation: 8

|

| 145 |

+

test: 8

|

| 146 |

+

predict: 8

|

| 147 |

+

batch_size:

|

| 148 |

+

training: 1

|

| 149 |

+

validation: 1

|

| 150 |

+

test: 4

|

| 151 |

+

predict: 4

|

| 152 |

+

limit_batches:

|

| 153 |

+

training: 1000

|

| 154 |

+

validation: 10

|

| 155 |

+

test: 20

|

| 156 |

+

predict: 20

|

| 157 |

+

dataset: ${hardware.paths.data}/${hardware.files.dataset}

|

| 158 |

+

land_dataset: ${hardware.paths.data}/${hardware.files.dataset_land}

|

| 159 |

+

land_variables: [100u, 100v, swvl1, swvl2, stl1, stl2, tcc, lcc, mcc, hcc, sf, ro, strd, ssrd]

|

| 160 |

+

training:

|

| 161 |

+

dataset:

|

| 162 |

+

- dataset: ${dataloader.dataset}

|

| 163 |

+

start: null

|

| 164 |

+

end: 2022

|

| 165 |

+

frequency: ${data.frequency}

|

| 166 |

+

drop: []

|

| 167 |

+

- dataset: ${dataloader.land_dataset}

|

| 168 |

+

start: null

|

| 169 |

+

end: 2022

|

| 170 |

+

frequency: ${data.frequency}

|

| 171 |

+

select: ${dataloader.land_variables}

|

| 172 |

+

start: null

|

| 173 |

+

end: 2022

|

| 174 |

+

drop: []

|

| 175 |

+

validation:

|

| 176 |

+

dataset:

|

| 177 |

+

- dataset: ${dataloader.dataset}

|

| 178 |

+

start: 2022

|

| 179 |

+

end: 2022

|

| 180 |

+

frequency: ${data.frequency}

|

| 181 |

+

drop: []

|

| 182 |

+

- dataset: ${dataloader.land_dataset}

|

| 183 |

+

start: 2022

|

| 184 |

+

end: 2022

|

| 185 |

+

frequency: ${data.frequency}

|

| 186 |

+

select: ${dataloader.land_variables}

|

| 187 |

+

start: 2022

|

| 188 |

+

end: 2022

|

| 189 |

+

drop: []

|

| 190 |

+

validation_rollout: 1

|

| 191 |

+

|

| 192 |

+

diagnostics:

|

| 193 |

+

plot:

|

| 194 |

+

asynchronous: False

|

| 195 |

+

datashader: True

|

| 196 |

+

frequency:

|

| 197 |

+

batch: 750

|

| 198 |

+

epoch: 10

|

| 199 |

+

parameters: [tp]

|

| 200 |

+

sample_idx: 0

|

| 201 |

+

precip_and_related_fields: [tp, cp]

|

| 202 |

+

callbacks: []

|

| 203 |

+

enabled: True

|

| 204 |

+

scatter: False

|

| 205 |

+

mode: asyncio

|

| 206 |

+

callbacks: {}

|

| 207 |

+

benchmark_profiler:

|

| 208 |

+

memory:

|

| 209 |

+

enabled: True

|

| 210 |

+

steps: 5

|

| 211 |

+

warmup: 2

|

| 212 |

+

extra_plots: False

|

| 213 |

+

trace_rank0_only: False

|

| 214 |

+

time:

|

| 215 |

+

enabled: True

|

| 216 |

+

verbose: False

|

| 217 |

+

speed:

|

| 218 |

+

enabled: True

|

| 219 |

+

system:

|

| 220 |

+

enabled: True

|

| 221 |

+

model_summary:

|

| 222 |

+

enabled: True

|

| 223 |

+

snapshot:

|

| 224 |

+

enabled: True

|

| 225 |

+

steps: 4

|

| 226 |

+

warmup: 0

|

| 227 |

+

debug:

|

| 228 |

+

anomaly_detection: False

|

| 229 |

+

profiler: False

|

| 230 |

+

enable_checkpointing: True

|

| 231 |

+

checkpoint:

|

| 232 |

+

every_n_minutes:

|

| 233 |

+

save_frequency: 30

|

| 234 |

+

num_models_saved: 3

|

| 235 |

+

every_n_epochs:

|

| 236 |

+

save_frequency: 1

|

| 237 |

+

num_models_saved: 3

|

| 238 |

+

every_n_train_steps:

|

| 239 |

+

save_frequency: null

|

| 240 |

+

num_models_saved: 0

|

| 241 |

+

log:

|

| 242 |

+

wandb:

|

| 243 |

+

enabled: False

|

| 244 |

+

tensorboard:

|

| 245 |

+

enabled: False

|

| 246 |

+

mlflow:

|

| 247 |

+

enabled: False

|

| 248 |

+

interval: 100

|

| 249 |

+

enable_progress_bar: True

|

| 250 |

+

print_memory_summary: False

|

| 251 |

+

|

| 252 |

+

hardware:

|

| 253 |

+

paths:

|

| 254 |

+

data: ${oc.decode:${oc.env:DATASETS_PATH}}

|

| 255 |

+

output: ${oc.decode:${oc.env:OUTPUT_DIR}}

|

| 256 |

+

logs:

|

| 257 |

+

base: ${hardware.paths.output}/logs

|

| 258 |

+

wandb: ${hardware.paths.output}/logs/wandb

|

| 259 |

+

mlflow: ${hardware.paths.output}/logs/mlflow

|

| 260 |

+

tensorboard: ${hardware.paths.output}/logs/tensorboard

|

| 261 |

+

checkpoints: ${hardware.paths.output}/checkpoint/

|

| 262 |

+

plots: ${hardware.paths.output}/plots/

|

| 263 |

+

profiler: ${hardware.paths.output}/profiler/

|

| 264 |

+

graph: ${hardware.paths.output}/graphs/

|

| 265 |

+

files:

|

| 266 |

+

dataset: aifs-od-an-oper-0001-mars-n320-2016-2023-6h-v6.zarr

|

| 267 |

+

dataset_land: aifs-od-an-oper-0001-mars-n320-2016-2023-6h-v1-land.zarr

|

| 268 |

+

graph: graph_enc_proc_dec_n320.pt

|

| 269 |

+

checkpoint:

|

| 270 |

+

every_n_epochs: aifs-by_epoch-epoch_{epoch:03d}-val_wmse_{val_wmse:.3e}

|

| 271 |

+

every_n_train_steps: aifs-by_step-epoch_{epoch:03d}-step_{step:06d}

|

| 272 |

+

every_n_minutes: aifs-by_time-epoch_{epoch:03d}-step_{step:06d}

|

| 273 |

+

warm_start: null

|

| 274 |

+

accelerator: auto

|

| 275 |

+

num_gpus_per_node: 4

|

| 276 |

+

num_nodes: 16

|

| 277 |

+

num_gpus_per_model: 4

|

| 278 |

+
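The `hardware` block above fixes the GPU layout (`num_nodes: 16`, `num_gpus_per_node: 4`, `num_gpus_per_model: 4`) and the checkpoint filename templates. The sketch below works through the arithmetic those values imply. It assumes that each group of `num_gpus_per_model` GPUs holds one model replica and that each replica consumes `batch_size.training` samples per step; the function name `model_replicas` is hypothetical, and the filename expansion uses plain `str.format` as a stand-in for whatever the training framework does internally.

```python
def model_replicas(num_nodes, num_gpus_per_node, num_gpus_per_model):
    """Number of model-parallel groups that fit on the allocated GPUs."""
    total_gpus = num_nodes * num_gpus_per_node
    if total_gpus % num_gpus_per_model != 0:
        raise ValueError("total GPUs must be a multiple of num_gpus_per_model")
    return total_gpus // num_gpus_per_model


# Values copied from the config.
replicas = model_replicas(num_nodes=16, num_gpus_per_node=4, num_gpus_per_model=4)

# Assumed relation: one training batch per replica per step (batch_size.training = 1).
samples_per_step = replicas * 1

# The checkpoint templates are ordinary Python format strings.
template = "aifs-by_epoch-epoch_{epoch:03d}-val_wmse_{val_wmse:.3e}"
example_name = template.format(epoch=3, val_wmse=0.0123)  # illustrative values

print(replicas, samples_per_step, example_name)
```

With these settings the 64 GPUs form 16 replicas, so under the stated assumption one optimizer step sees 16 samples.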

|

| 279 |

+

graph:

|

| 280 |

+

overwrite: True

|

| 281 |

+

data: data

|

| 282 |

+

hidden: hidden

|

| 283 |

+

nodes:

|

| 284 |

+

data:

|

| 285 |

+

node_builder:

|

| 286 |

+

_target_: anemoi.graphs.nodes.ZarrDatasetNodes

|

| 287 |

+

dataset: ${dataloader.dataset}

|

| 288 |

+

attributes:

|

| 289 |

+

area_weight:

|

| 290 |

+

_target_: anemoi.graphs.nodes.attributes.AreaWeights

|

| 291 |

+

norm: unit-max

|

| 292 |

+

hidden:

|

| 293 |

+

node_builder:

|

| 294 |

+

_target_: anemoi.graphs.nodes.ReducedGaussianGridNodes

|

| 295 |

+

grid: o96

|

| 296 |

+

edges:

|

| 297 |

+

- source_name: data

|

| 298 |

+

target_name: hidden

|

| 299 |

+

edge_builder:

|

| 300 |

+

_target_: anemoi.graphs.edges.CutOffEdges

|

| 301 |

+

cutoff_factor: 0.6

|

| 302 |

+

attributes:

|

| 303 |

+

edge_length:

|

| 304 |

+

_target_: anemoi.graphs.edges.attributes.EdgeLength

|

| 305 |

+

norm: unit-std

|

| 306 |

+

edge_dirs:

|

| 307 |

+

_target_: anemoi.graphs.edges.attributes.EdgeDirection

|

| 308 |

+

norm: unit-std

|

| 309 |

+

- source_name: hidden

|

| 310 |

+

target_name: data

|

| 311 |

+

edge_builder:

|

| 312 |

+

_target_: anemoi.graphs.edges.KNNEdges

|

| 313 |

+

num_nearest_neighbours: 3

|

| 314 |

+

attributes:

|

| 315 |

+

edge_length:

|

| 316 |

+

_target_: anemoi.graphs.edges.attributes.EdgeLength

|

| 317 |

+

norm: unit-std

|

| 318 |

+

edge_dirs:

|

| 319 |

+

_target_: anemoi.graphs.edges.attributes.EdgeDirection

|

| 320 |

+

norm: unit-std

|

| 321 |

+

attributes:

|

| 322 |

+

nodes:

|

| 323 |

+

area_weight:

|

| 324 |

+

_target_: anemoi.graphs.nodes.attributes.AreaWeights

|

| 325 |

+

norm: unit-max

|

| 326 |

+

edges:

|

| 327 |

+

edge_length:

|

| 328 |

+

_target_: anemoi.graphs.edges.attributes.EdgeLength

|

| 329 |

+

norm: unit-std

|

| 330 |

+

edge_dirs:

|

| 331 |

+

_target_: anemoi.graphs.edges.attributes.EdgeDirection

|

| 332 |

+

norm: unit-std

|

| 333 |

+
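The `graph` block above connects `data` nodes to `hidden` nodes with `CutOffEdges` and connects `hidden` nodes back to `data` nodes with `KNNEdges` (`num_nearest_neighbours: 3`). As a rough illustration of the two strategies, the following sketch builds both kinds of edges from latitude/longitude pairs using great-circle distance. It is not the `anemoi.graphs` implementation: the function names are hypothetical, and because the config only gives `cutoff_factor: 0.6` without saying how that factor becomes a distance, the radius is passed in explicitly.

```python
from math import asin, cos, radians, sin, sqrt


def great_circle(a, b):
    """Angular distance in radians between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * asin(sqrt(h))


def knn_edges(sources, targets, k):
    """Connect every target node to its k nearest source nodes."""
    edges = []
    for t_idx, target in enumerate(targets):
        ranked = sorted(range(len(sources)), key=lambda s: great_circle(sources[s], target))
        edges.extend((s_idx, t_idx) for s_idx in ranked[:k])
    return edges


def cutoff_edges(sources, targets, radius):
    """Connect every target node to all source nodes within `radius` (radians)."""
    return [
        (s_idx, t_idx)
        for t_idx, target in enumerate(targets)
        for s_idx, source in enumerate(sources)
        if great_circle(source, target) <= radius
    ]


# Toy grids on the equator, for demonstration only.
hidden = [(0.0, 0.0), (0.0, 10.0), (0.0, 20.0), (0.0, 90.0)]
data = [(0.0, 1.0), (0.0, 50.0)]

decoder_edges = knn_edges(hidden, data[:1], k=3)          # hidden -> data
encoder_edges = cutoff_edges(data, hidden[:1], radians(5))  # data -> hidden
```

The brute-force search here is quadratic in the number of nodes; a real grid at this resolution would need a spatial index.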

|

| 334 |

+

model:

|

| 335 |

+

activation: GELU

|

| 336 |

+

num_channels: 1024

|

| 337 |

+

model:

|

| 338 |

+

_target_: anemoi.models.models.encoder_processor_decoder.AnemoiModelEncProcDec

|

| 339 |

+

processor:

|

| 340 |

+

_target_: anemoi.models.layers.processor.TransformerProcessor

|

| 341 |

+

_convert_: all

|

| 342 |

+

activation: GELU

|

| 343 |

+

num_layers: 16

|

| 344 |

+

num_chunks: 2

|

| 345 |

+

mlp_hidden_ratio: 4

|

| 346 |

+

num_heads: 16

|

| 347 |

+

window_size: 1120

|

| 348 |

+

dropout_p: 0.0

|

| 349 |

+

encoder:

|

| 350 |

+

_target_: anemoi.models.layers.mapper.GraphTransformerForwardMapper

|

| 351 |

+

_convert_: all

|

| 352 |

+

trainable_size: 8

|

| 353 |

+

sub_graph_edge_attributes: [edge_length, edge_dirs]

|

| 354 |

+

activation: GELU

|

| 355 |

+

num_chunks: 1

|

| 356 |

+

mlp_hidden_ratio: 4

|

| 357 |

+

num_heads: 16

|

| 358 |

+

decoder:

|

| 359 |

+

_target_: anemoi.models.layers.mapper.GraphTransformerBackwardMapper

|

| 360 |

+

_convert_: all

|

| 361 |

+

trainable_size: 8

|

| 362 |

+

sub_graph_edge_attributes: [edge_length, edge_dirs]

|

| 363 |

+

activation: GELU

|

| 364 |

+

num_chunks: 1

|

| 365 |

+

mlp_hidden_ratio: 4

|

| 366 |

+

num_heads: 16

|

| 367 |

+

trainable_parameters:

|

| 368 |

+

data: 8

|

| 369 |

+

hidden: 8

|

| 370 |

+

data2hidden: 8

|

| 371 |

+

hidden2data: 8

|

| 372 |

+

attributes:

|

| 373 |

+

edges: [edge_length, edge_dirs]

|

| 374 |

+

nodes: []

|

| 375 |

+

node_loss_weight: area_weight

|

| 376 |

+

bounding:

|

| 377 |

+

- _target_: anemoi.models.layers.bounding.ReluBounding

|

| 378 |

+

variables:

|

| 379 |

+

- tp

|

| 380 |

+

- ro

|

| 381 |

+

- tcw

|

| 382 |

+

- ssrd

|

| 383 |

+

- q_50

|

| 384 |

+

- q_100

|

| 385 |

+

- q_150

|

| 386 |

+

- q_200

|

| 387 |

+

- q_250

|

| 388 |

+

- q_300

|

| 389 |

+

- q_400

|

| 390 |

+

- q_500

|

| 391 |

+

- q_600

|

| 392 |

+

- q_700

|

| 393 |

+

- q_850

|

| 394 |

+

- q_925

|

| 395 |

+

- q_1000

|

| 396 |

+

- _target_: anemoi.models.layers.bounding.HardtanhBounding

|

| 397 |

+

variables: [tcc, swvl1, swvl2]

|

| 398 |

+

min_val: 0

|

| 399 |

+

max_val: 1

|

| 400 |

+

- _target_: anemoi.models.layers.bounding.FractionBounding

|

| 401 |

+

variables: [cp, sf]

|

| 402 |

+

min_val: 0

|

| 403 |

+

max_val: 1

|

| 404 |

+

total_var: tp

|

| 405 |

+

- _target_: anemoi.models.layers.bounding.FractionBounding

|

| 406 |

+

variables: [lcc, mcc, hcc]

|

| 407 |

+

min_val: 0

|

| 408 |

+

max_val: 1

|

| 409 |

+

total_var: tcc

|

| 410 |

+
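The `bounding` list at the end of the `model` block constrains selected outputs: `ReluBounding` for non-negative fields, `HardtanhBounding` for fields limited to `[0, 1]`, and `FractionBounding` for components expressed relative to a total (`cp` and `sf` against `tp`; `lcc`, `mcc` and `hcc` against `tcc`). The scalar sketch below shows one plausible reading of those three strategies. It assumes that fraction bounding clamps the raw output to `[min_val, max_val]` and multiplies it by the already-bounded total; the function names are hypothetical and the real layers operate on tensors.

```python
def relu_bound(value):
    """Clamp below at zero, e.g. for tp, ro or specific humidity."""
    return max(value, 0.0)


def hardtanh_bound(value, min_val, max_val):
    """Clamp into [min_val, max_val], e.g. tcc or soil moisture in [0, 1]."""
    return min(max(value, min_val), max_val)


def fraction_bound(value, total, min_val=0.0, max_val=1.0):
    """Treat the raw output as a fraction of `total` after clamping it."""
    return hardtanh_bound(value, min_val, max_val) * total


# Made-up raw model outputs for demonstration.
tp = relu_bound(8.0)
cp = fraction_bound(0.25, total=tp)   # a quarter of total precipitation
sf = fraction_bound(1.7, total=tp)    # clamped to 1.0, so it cannot exceed tp
```

Under this reading a component can never be negative or larger than its total, which is the physical constraint the config appears to be encoding.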

|

| 411 |

+

training:

|

| 412 |

+

run_id: null

|

| 413 |

+

fork_run_id: ${oc.decode:${oc.env:PRETRAINING_RUN_ID}}

|

| 414 |

+

load_weights_only: True

|

| 415 |

+

deterministic: False

|

| 416 |

+

precision: 16-mixed

|

| 417 |

+

multistep_input: 2

|

| 418 |

+

accum_grad_batches: 1

|

| 419 |

+

num_sanity_val_steps: 6

|

| 420 |

+

gradient_clip:

|

| 421 |

+

val: 32.0

|

| 422 |

+

algorithm: value

|

| 423 |

+

swa:

|

| 424 |

+

enabled: False

|

| 425 |

+

lr: 0.0001

|

| 426 |

+

zero_optimizer: False

|

| 427 |

+

training_loss:

|

| 428 |

+

_target_: anemoi.training.losses.mse.WeightedMSELoss

|

| 429 |

+

scalars:

|

| 430 |

+

- variable

|

| 431 |

+

- loss_weights_mask

|

| 432 |

+

ignore_nans: False

|

| 433 |

+

loss_gradient_scaling: False

|

| 434 |

+

validation_metrics:

|

| 435 |

+

- _target_: anemoi.training.losses.mse.WeightedMSELoss

|

| 436 |

+

scalars: []

|

| 437 |

+

ignore_nans: True

|

| 438 |

+

rollout:

|

| 439 |

+

start: 1

|

| 440 |

+

epoch_increment: 1

|

| 441 |

+

max: 12

|

| 442 |

+

max_epochs: 13

|

| 443 |

+

max_steps: 150000

|

| 444 |

+

lr:

|

| 445 |

+

rate: 8.0e-7

|

| 446 |

+

iterations: 7900

|

| 447 |

+

min: 3.0e-7

|

| 448 |

+

warmup_t: 100

|

| 449 |

+

variable_loss_scaling:

|

| 450 |

+

default: 1

|

| 451 |

+

pl:

|

| 452 |

+

q: 0.6

|

| 453 |

+

t: 6

|

| 454 |

+

u: 0.8

|

| 455 |

+

v: 0.5

|

| 456 |

+

w: 0.001

|

| 457 |

+

z: 12

|

| 458 |

+

sfc:

|

| 459 |

+

sp: 10

|

| 460 |

+