File size: 1,130 Bytes

97cb585 b385e6a 97cb585 b385e6a 99b4680 b385e6a 58177cc b385e6a e7d321a b385e6a |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 |

---

license: mit

language:

- en

library_name: transformers

pipeline_tag: feature-extraction

---

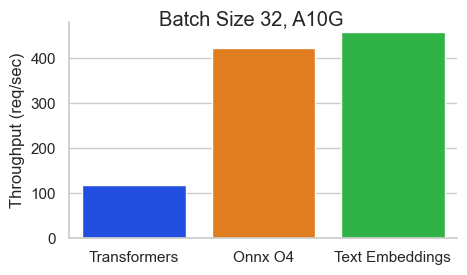

# BGE-Large-En-V1.5-ONNX-O4

This is an `ONNX O4` strategy optimized version of [BAAI/bge-large-en-v1.5](https://huggingface.co/BAAI/bge-large-en-v1.5) optimal for `Cuda`. It should be much faster than the original

version.

## Usage

```python

# pip install "optimum[onnxruntime-gpu]" transformers

from optimum.onnxruntime import ORTModelForFeatureExtraction

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained('hooman650/bge-large-en-v1.5-onnx-o4')

model = ORTModelForFeatureExtraction.from_pretrained('hooman650/bge-large-en-v1.5-onnx-o4')

model.to("cuda")

pairs = ["pandas usually live in the jungles"]

with torch.no_grad():

inputs = tokenizer(pairs, padding=True, truncation=True, return_tensors='pt', max_length=512)

sentence_embeddings = model(**inputs)[0][:, 0]

# normalize embeddings

sentence_embeddings = torch.nn.functional.normalize(sentence_embeddings, p=2, dim=1)

``` |