Update README.md

README.md

CHANGED

|

@@ -17,17 +17,24 @@ language:

|

|

| 17 |

|

| 18 |

---

|

| 19 |

|

| 20 |

-

|

| 21 |

|

| 22 |

-

|

| 23 |

|

| 24 |

-

|

| 25 |

|

| 26 |

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 27 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 28 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

| 29 |

- For finalization, a TIES merge.

|

| 30 |

|

| 31 |

### Ancestor Models:

|

| 32 |

|

| 33 |

**Top influences:** These ancestors are base models and present in the model_stocks, but are heavily re-emphasized in the DELLA and SLERP merges.

|

|

@@ -38,9 +45,7 @@ Thanks go to @arcee-ai's team for the bounties of mergekit, and to @CultriX for

|

|

| 38 |

|

| 39 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for ARC and GPQA, Wernicke is re-emphasized in small but highly-ranked portions of the model.

|

| 40 |

|

| 41 |

-

|

| 42 |

-

|

| 43 |

-

### Merge Strategy:

|

| 44 |

|

| 45 |

```yaml

|

| 46 |

name: lamarck-14b-reason-della # This contributes the knowledge and reasoning pool, later to be merged

|

|

|

|

| 17 |

|

| 18 |

---

|

| 19 |

|

| 20 |

+

### Overview:

|

| 21 |

|

| 22 |

+

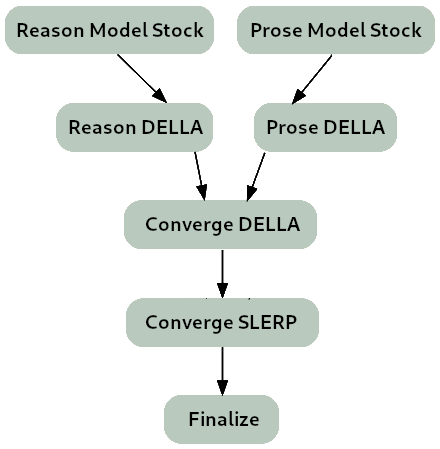

Lamarck-14B version 0.3 is the product of a custom toolchain built around multi-stage templated merges, with an end-to-end strategy for giving each ancestor model priority where it's most effective. It is strongly based on [arcee-ai/Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small) as a diffuse influence for prose and reasoning. Arcee's pioneering use of distillation and innovative merge techniques creates a diverse knowledge pool for its models.

|

| 23 |

|

| 24 |

+

**The merge strategy of Lamarck 0.3 can be summarized as:**

|

| 25 |

|

| 26 |

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 27 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 28 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

| 29 |

- For finalization, a TIES merge.
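
For readers unfamiliar with the SLERP step named in the list above, the following is a minimal sketch of spherical linear interpolation between two weight vectors. It illustrates the general technique only: the function name `slerp`, the `eps` threshold, and the use of plain Python lists are assumptions made for this example, and it is not mergekit's implementation or the exact procedure used for Lamarck.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherically interpolate between two equal-length vectors.

    t = 0 returns v0, t = 1 returns v1. Falls back to plain linear
    interpolation when the vectors are (anti)parallel or near zero,
    where the spherical formula is numerically unstable.
    """
    linear = [(1 - t) * a + t * b for a, b in zip(v0, v1)]

    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    if norm0 < eps or norm1 < eps:
        return linear

    # Cosine of the angle between the two vectors, clamped for acos.
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    if sin_theta < eps:
        return linear

    # Weights follow the arc between the vectors, not the chord.
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


if __name__ == "__main__":
    # Toy 2-D "weights": halfway between two orthogonal unit vectors.
    print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

In an actual merge the same interpolation would be applied tensor by tensor, with `t` controlling how far the result leans toward the second model.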

|

| 30 |

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

### Thanks go to:

|

| 34 |

+

|

| 35 |

+

- @arcee-ai's team for the bounties of mergekit and the exceptional Virtuoso Small model

|

| 36 |

+

- @CultriX for the helpful examples of memory-efficient sliced merges and evolutionary merging. Their contribution of tinyevals on version 0.1 of Lamarck did much to validate the hypotheses of the process used here.

|

| 37 |

+

|

| 38 |

### Ancestor Models:

|

| 39 |

|

| 40 |

**Top influences:** These ancestors are base models and present in the model_stocks, but are heavily re-emphasized in the DELLA and SLERP merges.

|

|

|

|

| 45 |

|

| 46 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for ARC and GPQA, Wernicke is re-emphasized in small but highly-ranked portions of the model.

|

| 47 |

|

| 48 |

+

### Merge YAML:

|

| 49 |

|

| 50 |

```yaml

|

| 51 |

name: lamarck-14b-reason-della # This contributes the knowledge and reasoning pool, later to be merged

|